I promised some followers of this blog that I’d post some thoughts on what I am looking at and the progress of my evaluations here at ipHouse for our virtualization products.

Unfortunately we also did an office move in the intervening period and guess who didn’t have time?

So here is where I am at.

TL;DR: Very happy with the Tegile storage appliance so far.

Tegile came out to my office on December 15th, 2011, having already shipped us one of their new dual-controller units, which they call Zebi, for evaluation. We had racked it up and cabled it up, and they spent their 10 minutes in the data center doing their setup magic. Yep, you read that right: only 10 minutes to set it up. The other thing that is not obvious right away is that they came out to my office to set up an evaluation unit. I have never met a hardware vendor who would do such a thing. Kudos!

These are some of the nicest people I have dealt with at any hardware vendor. And I’m not saying that because they covered lunch for Nick and me, but because I spent my whole day with them and never got bored or felt like I was being sales’ed to death. Technical questions and discussions were the norm. And of course we spent time just chit-chatting.

Their appliance (Zebi HA2000EP-A2) has 2 controllers and 16 drive bays. The system is built on Solaris, but with their own twist on how ZFS stores data, aimed at the issues that come up with doing deduplication in primary storage and with rebuild times on larger disk drives.

In a normal ZFS setup, deduplicating each 1 TiB of storage requires about 8 GiB of RAM (or perhaps space on L2ARC) to hold the deduplication table. The Tegile folks did something different by extending their ZFS implementation to store meta-data and deduplication tables on SSD. This addresses two issues:

- Deduplication of 1 TiB of storage requires ~8 GiB of RAM

- Rebuilding a failed drive will be sped up by having meta-data stored on a fast source device, like SSD
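To put that rule of thumb in perspective, here is a rough back-of-the-envelope sketch. The ~8 GiB per TiB figure is the estimate quoted above, not a measured value, and the function name and the 5.5 TiB example size are just for illustration.

```python
def dedup_table_gib(pool_tib: float, gib_per_tib: float = 8.0) -> float:
    """Estimate dedup table size in GiB from the ~8 GiB per TiB rule of thumb."""
    return pool_tib * gib_per_tib


# Example: a hypothetical 5.5 TiB pool
print(f"{dedup_table_gib(5.5):.1f} GiB")  # 44.0 GiB
```

That much RAM per controller gets expensive quickly, which is presumably why moving the tables to a mirrored pair of SSDs is attractive.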

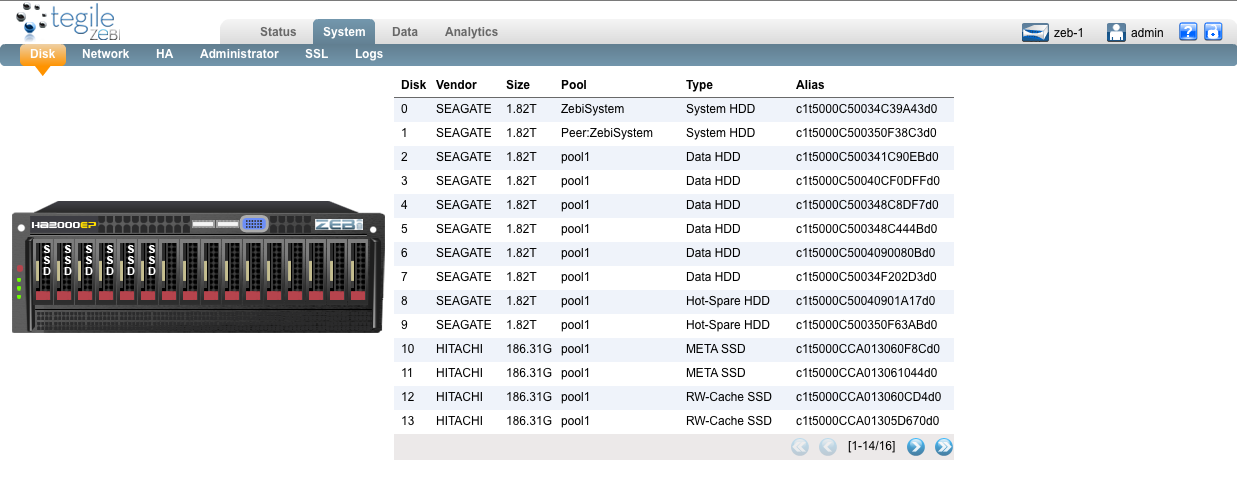

First thing you’ll notice in the disk layout below is the number of SSDs in the chassis. In fact there are ten 2 TiB SAS disks and six 200 GiB SSDs (for read cache, ZIL, and the meta-data/dedupe tables). I like the ratio.

10 GiB of data-space was partitioned from the individual SSDs to use as the ZIL and then mirrored together – a very good practice.

The rest of the SSD storage is used as either read cache (4 slices of SSD, un-mirrored) or meta-data/deduplication table storage (2 slices of SSD, mirrored).

Volume layout is using mirrored pairs (by my choice) with 2 hot-spare drives.

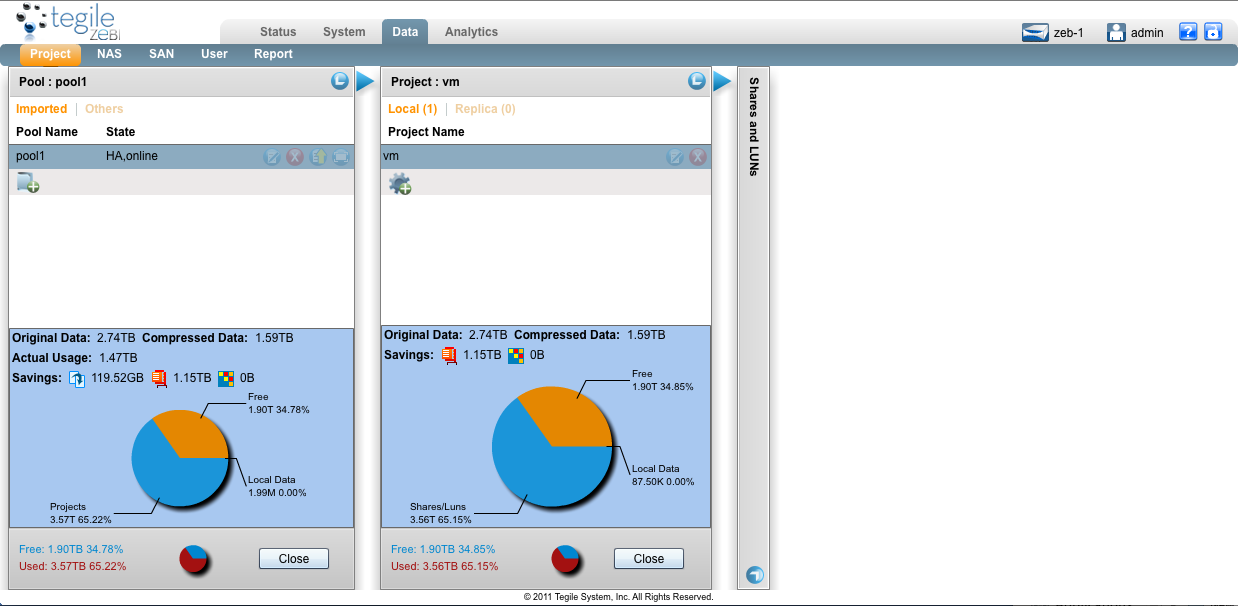

This next screenshot shows the data page with information about the pool itself and the ZFS volume. I cropped the right side because there are customer names listed. Sorry folks.

Look at the bottom left side.

The first number is the deduplication savings and the second is the compression savings. Original data storage is 2.74 TiB and we have deduplicated 119.52 GiB (7.34%) and compressed 1.15 TiB (42.01%) of used storage.

(The reason that a 5.5 TiB volume with 2.7 TiB in use, minus dedupe and compression, only has 1.9 TiB free is that there are other ZFS shares carved out for my customers. Those shares use reserved space, which reduces the free space available for other things.)
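For the curious, the savings percentages quoted above can be roughly reproduced from the displayed sizes. This is only a sketch: I am assuming the compression percentage is relative to the original data and the deduplication percentage is relative to what is left after compression. That assumption happens to match the numbers, but it is not something Tegile has confirmed.

```python
GIB_PER_TIB = 1024

original_tib = 2.74          # original data, as displayed
compressed_saved_tib = 1.15  # compression savings, as displayed
dedup_saved_gib = 119.52     # deduplication savings, as displayed


def percent(part: float, whole: float) -> float:
    return 100.0 * part / whole


compression_pct = percent(compressed_saved_tib, original_tib)

# Assumption: dedup savings are measured against the post-compression data.
after_compression_gib = (original_tib - compressed_saved_tib) * GIB_PER_TIB
dedup_pct = percent(dedup_saved_gib, after_compression_gib)

print(f"compression: {compression_pct:.2f}%")  # 41.97%
print(f"dedup:       {dedup_pct:.2f}%")        # 7.34%
```

The small gap between 41.97% here and the 42.01% on screen is most likely rounding in the displayed sizes.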

Last picture is all about measurement and metrics and is a small snapshot of about 20 minutes while I did some OS patching on some Windows servers.

About measurement and metrics: SNMP is not supported yet but is on the feature update list, so for now you must use the Zebi web interface to view the data.

Speaking of performance that you cannot see above: I have these systems (remember, dual heads) configured with two 1 Gbps Ethernet ports bonded together as one logical connection offering 2 Gbps of throughput. I have had no problem saturating these ports. This is very easy to recreate, as I have mounted the NFS volume on 3 VMware vSphere 5 servers and I can start concurrent Storage vMotion events from the physical servers to the volume mounted from the Zebi appliance.
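As a quick sanity check on what "saturated" means here, this sketch converts the bonded line rate into MiB/s. It ignores Ethernet, IP, and NFS overhead, so real-world numbers will come in somewhat lower; the function name is just for illustration.

```python
def line_rate_mib_per_s(links: int, gbps_per_link: float = 1.0) -> float:
    """Theoretical aggregate line rate in MiB/s, ignoring protocol overhead."""
    bits_per_s = links * gbps_per_link * 1_000_000_000
    return bits_per_s / 8 / (1024 * 1024)


print(f"{line_rate_mib_per_s(2):.1f} MiB/s")  # 238.4 MiB/s
```

Many bonding modes pin a single flow to one physical link, so it usually takes several concurrent streams, such as the parallel Storage vMotions from multiple hosts described above, to approach the aggregate figure.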

I/O wise it has taken everything I have thrown at it without a hiccup, and overall performance has been absolutely fantastic. I/O is a little harder to test and graph in a real-world way. I have run iometer twice so far and done a few bonnie++ runs with postmark running on other VMs, but in the end that is all still synthetic load generation, while the throughput paragraph above is real-world data that is easy to collect and reproduce.

I have had a single issue: 2 bad disk drives in the same slot, which made me wonder about potential problems with that slot in the chassis. The third drive has worked just fine and has not tossed any errors at all according to Solaris via ‘iostat -en’.
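If you want to keep an eye on this without reading the output by hand, a small parser is enough. The sketch below assumes the usual Solaris error-summary layout of four counters (s/w, h/w, trn, tot) followed by the device name. The sample text and device names are made up for illustration; they are not output from the Zebi.

```python
# Illustrative sample only, not real output from this system.
SAMPLE = """\
  ---- errors ---
  s/w h/w trn tot device
    0   0   0   0 c1t0d0
    0   2   1   3 c1t1d0
"""


def devices_with_errors(iostat_output: str) -> dict[str, int]:
    """Return {device: total_errors} for rows whose 'tot' column is non-zero."""
    flagged = {}
    for line in iostat_output.splitlines():
        fields = line.split()
        # Data rows have four integer counters followed by the device name.
        if len(fields) == 5 and all(f.isdigit() for f in fields[:4]):
            total = int(fields[3])
            if total > 0:
                flagged[fields[4]] = total
    return flagged


print(devices_with_errors(SAMPLE))  # {'c1t1d0': 3}
```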

Finally, the volume layout itself, with space used and a snapshot of the bandwidth per device:

```
% zpool iostat -v pool1
                               capacity    operations     bandwidth
pool                         alloc   free   read  write   read  write
---------------------------  -----  -----  -----  -----  -----  -----
pool1                        1.47T  3.96T     55   1002  3.44M  11.1M
  mirror                      480G  1.34T     25    154  1.82M  1008K
    c1t5000C500341C90EBd0        -      -     13     29  1.20M  1005K
    c1t5000C50040CF0DFFd0        -      -      1     37   115K  1.58M
  mirror                      515G  1.31T     13    160   822K  1.21M
    c1t5000C500348C8DF7d0        -      -      6     34   536K  1.21M
    c1t5000C500348C444Bd0        -      -      6     34   535K  1.21M
  mirror                      515G  1.31T     13    163   820K  1.21M
    c1t5000C5004090080Bd0        -      -      6     33   535K  1.21M
    c1t5000C50034F202D3d0        -      -      6     34   535K  1.21M
logs                             -      -      -      -      -      -
  mirror                     60.5M  9.88G      0     58      0  2.96M
    c1t5000CCA013060CD4d0s0      -      -      0     58      0  2.96M
    c1t5000CCA01305D670d0s0      -      -      0     58      0  2.96M
  mirror                     60.3M  9.88G      0     58      0  2.96M
    c1t5000CCA013060F08d0s0      -      -      0     58      0  2.96M
    c1t5000CCA013060D88d0s0      -      -      0     58      0  2.96M
meta                             -      -      -      -      -      -
  mirror                     8.26G   178G      3    407  17.9K  1.75M
    c1t5000CCA013060F8Cd0        -      -      1    296  43.2K  1.75M
    c1t5000CCA013061044d0        -      -      1    296  42.7K  1.75M
cache                            -      -      -      -      -      -
  c1t5000CCA013060CD4d0s1     176G     8M      1      2   193K   300K
  c1t5000CCA01305D670d0s1     176G     8M      1      2   192K   299K
  c1t5000CCA013060F08d0s1     176G     8M      1      2   193K   299K
  c1t5000CCA013060D88d0s1     176G     8M      1      2   192K   299K
---------------------------  -----  -----  -----  -----  -----  -----
```
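One way to read the output above is to check that the per-mirror allocations add up to the pool total. The sketch below does that for the three data mirrors. It assumes the size suffixes are binary units (powers of 1024), and the helper name is made up for illustration.

```python
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(text: str) -> float:
    """Convert a zpool-style size such as '480G' or '1.47T' to bytes."""
    if text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


# 'alloc' column of the three data mirrors in the listing
mirror_alloc = ["480G", "515G", "515G"]
total_tib = sum(to_bytes(s) for s in mirror_alloc) / UNITS["T"]

print(f"{total_tib:.2f}T")  # 1.47T, matching the pool1 line
```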

When you check them out – tell them you read about it on this blog. I won’t get any type of kickback but I think they would appreciate where their leads are coming from. I know I do in my business.

Thanks for reading!
