Here is the second installment of my series of posts dealing with storage that I am evaluating at ipHouse for our virtualization products.

I have been a busy boy the past couple of weeks.

Tintri came out to my office on February 20th, 2012 to help get a T540 set up on my network and to show off the features. We received the hardware on the 15th. Doug racked it up, cabled it, and we waited for Monday. Like other appliances, the setup took only 10 minutes. Quick, painless. I wish everything were this smooth.

TL;DR: Excellent, predictable performance built for VMware infrastructure.

A few things right off the bat that those looking at Tintri may want to know.

- This is an appliance that I refer to as a ‘one trick pony’. I don’t mean that to belittle the product but to describe the simple fact that it was created to do one thing and one thing only. My tests show it does that one thing very well.

- Referring to the above: there are no upgrades available. The configuration is static.

- There are two controllers operating as active/passive. Their reasoning for this is quite sound: in the case of a failure, do you want to keep running at full performance, or do you want a single surviving controller that is now overburdened? Makes sense to me.

- This is not a general purpose file server. In fact, at the time of this writing, it speaks only NFS and integrates only with VMware vSphere via vCenter. Integration with vCenter isn’t required, but it gives you insight into your virtual machine performance.

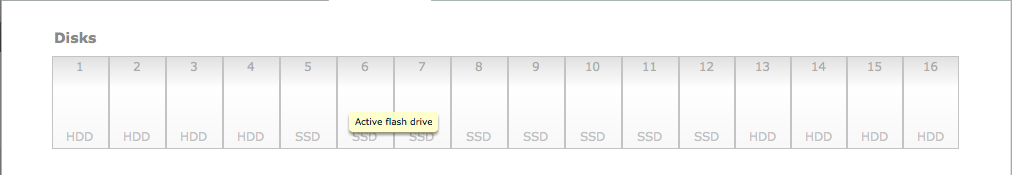

The appliance (T540) has 2 controllers and 16 drive bays. It runs a custom Linux system with a filesystem built in-house. SSDs are the primary storage (there are 8 in the chassis), and the other 8 bays hold 3 TiB hard disks.

There are 2 1Gbps ethernet ports per controller and they run in active/standby for management.

There are 2 10Gbps copper ethernet ports per controller, and like management they are in active/standby.

You only configure the active controller. The standby controller receives all of its configuration from the active controller.

Deduplication and compression are not adjustable. Period. This is a design consideration, one I agree with for this product.

Here is the disk layout from the UI, and of course it matches the physical as well.

I mentioned above that there is no tuning involved. That is also the case with the filesystem or RAID volume layout. The system is already optimized for virtualization and there are no user tunable knobs or switches.

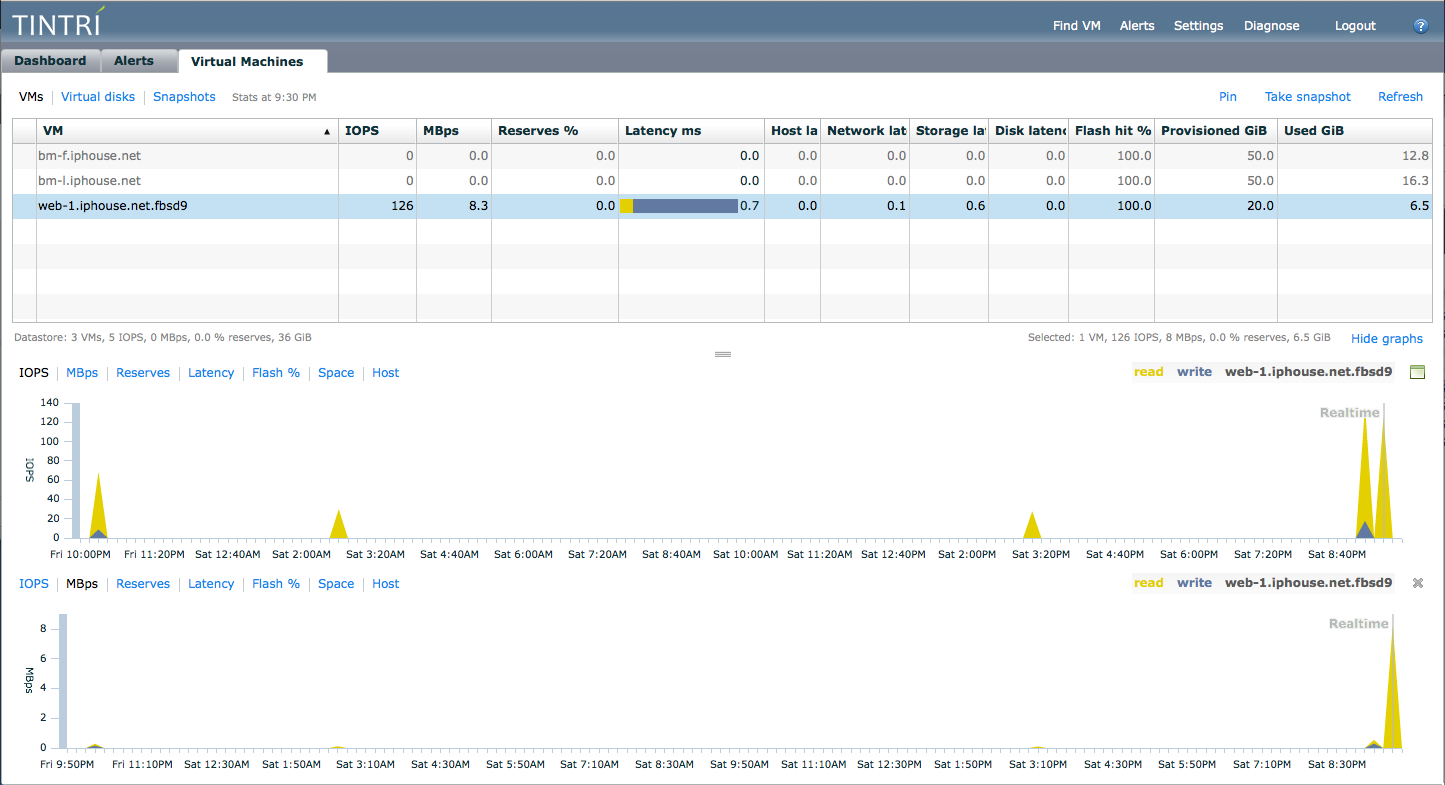

You can look at VMs directly, including performance measurements for each VM or for its individual VMDKs. This is also the user interface that gives the option to ‘pin’ a virtual disk into flash. Be careful with this: by over-pinning VMDKs instead of letting the appliance decide what is best, you could actually make your performance worse.

There are only 3 VMs on this storage at this time. The bm-f machine is FreeBSD 8-STABLE, bm-l is Ubuntu 10.04 LTS, and web-1 is a test deployment for updating our FreeBSD 8-STABLE web servers with FreeBSD 9-STABLE. I used the first two systems in my testing and benchmarking with results to be posted at a later date.

About measurement and metrics: SNMP is not supported, which limits you to using the web interface. I came away from my discussions with the impression that SNMP is coming in a later release.

This appliance does exactly what it says it will do: give you excellent performance for your virtualization infrastructure without a lot of management or IT hours invested.

It is also the only storage I have touched that gives you insight into where potential latency may lie. During my testing I could watch the host latency jump while the normalized network, storage, and disk latency stayed within reasonable limits. In other words, when I pushed the virtualization layer hard, the slowdown showed up at the host rather than in the network or on the appliance. This matters because you can now track down performance issues via 4 different points of reference. At the time of this writing I know of no other storage system that can do this.
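To make the idea of 4 points of reference concrete, here is a minimal sketch of how you might reason about one sample of those numbers. The function name, the dictionary keys, and the millisecond values are all made up for illustration; this is not Tintri's API or data from my tests, just the arithmetic of finding which layer dominates.

```python
from typing import Dict, Tuple


def dominant_latency(sample_ms: Dict[str, float]) -> Tuple[str, float]:
    """Return the layer with the highest latency and its share of the total.

    `sample_ms` maps a layer name to its latency in milliseconds.
    The layer names are hypothetical labels, not appliance field names.
    """
    total = sum(sample_ms.values())
    if total <= 0:
        raise ValueError("latency sample must contain a positive total")
    layer = max(sample_ms, key=sample_ms.get)
    return layer, sample_ms[layer] / total


# Made-up numbers: the host layer is struggling, the rest are calm.
sample = {"host": 12.0, "network": 1.0, "storage": 2.0, "disk": 5.0}
layer, share = dominant_latency(sample)
print(f"{layer} accounts for {share:.0%} of end-to-end latency")
```

With a breakdown like this you would go look at the hypervisor first, not the storage.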

The interface is very simple and straightforward. As there is no direct tuning, you are left with only basic items to configure, like the outgoing SMTP server for notifications and the vCenter credentials. Simple, effective.

Two limitations:

- The system doesn’t support exporting of separate mount points with different parameters, like allocation quotas. For a service provider this is something that I have had to take into consideration and is the only true negative aspect of the unit. You could create directories under the /tintri mount point to get some separation if you want/need to, though the user interface itself doesn’t have any type of permissions to create administrative domain separation.

- It also only has 1 network for exporting of its single NFS volume so plan accordingly.
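For the directory workaround mentioned in the first limitation, a rough sketch of what that could look like from a machine that has the NFS export mounted. Everything here is an assumption on my part: the helper name, the tenant names, and the idea that the export is mounted at `/tintri` on the client. It only gives you naming separation; it does nothing for quotas or administrative permissions.

```python
from pathlib import Path
from typing import Iterable, List


def make_tenant_dirs(mount_root: Path, tenants: Iterable[str]) -> List[Path]:
    """Create one directory per tenant directly under the mounted export.

    `mount_root` is wherever the single NFS volume is mounted on this client
    (assumed, e.g. Path("/tintri")). Tenant names are hypothetical.
    """
    created = []
    for tenant in tenants:
        # Refuse names that would escape or alias the mount root.
        if not tenant or "/" in tenant or tenant in (".", ".."):
            raise ValueError(f"invalid tenant name: {tenant!r}")
        path = mount_root / tenant
        path.mkdir(mode=0o750, exist_ok=True)  # no error if it already exists
        created.append(path)
    return created


# Example call (not executed here):
#   make_tenant_dirs(Path("/tintri"), ["customer-a", "customer-b"])
```

Each customer's VMs would then live under their own directory on the one datastore, which is about as much separation as you can get today.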

Contact them and get an evaluation. You will not be disappointed. Check them out – tell them you read about it on this blog! (no, I don’t receive anything for these recommendations but all of us love to know where people find us on the web)

Thanks for reading!
